Your employees are using AI, so what are you doing about it?

Your employees are already using AI, often without your knowledge.

The problem starts when they paste sensitive client data into ChatGPT, use browser plugins to summarise confidential information, or deploy unvetted AI scripts to automate internal workflows.

These tools may improve productivity, but they also introduce serious risks: data leakage, compliance breaches, and decisions based on inaccurate or hallucinated outputs.

Shadow AI refers to the unsanctioned use of AI tools by employees without oversight from IT or compliance teams. According to Microsoft’s 2024 Work Trend Index, 75% of knowledge workers use AI at work, and 78% of those AI users bring their own AI tools, often bypassing formal governance.

Why Shadow AI Is a Problem

The risks are not hypothetical. The volume of sensitive data being entered into public AI services has surged, and these tools often lack encryption, audit trails, or data residency controls. Worse still, they may store data in other jurisdictions, which can breach data protection and security regulations.

Beyond compliance, shadow AI undermines trust. Hallucinations (confident but factually incorrect answers generated by AI) can mislead users and damage reputations. Without governance, employees may unknowingly rely on biased or inaccurate models and make decisions that harm your customers, your partners, or the business itself.

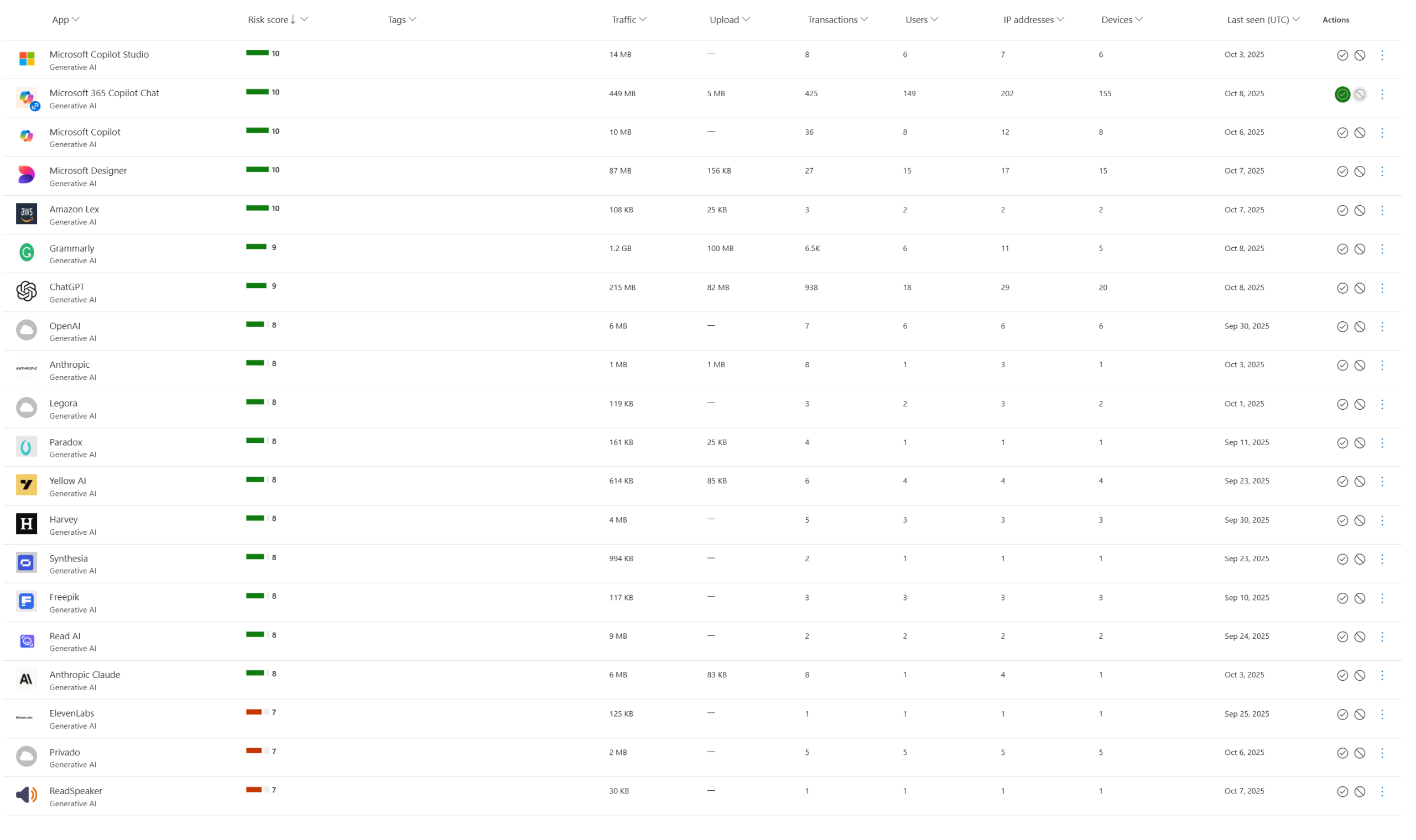

The screenshot above shows a breakdown of AI tools being used across an organisation, highlighting that IT teams may be unaware of the extent of usage.

What Should You Be Doing?

To use AI responsibly and avoid the pitfalls of shadow AI, organisations should:

Discover

Use tools like Microsoft Defender for Cloud Apps to identify unsanctioned AI usage.

Govern

Apply policies through Microsoft Purview and Intune to control data flow and access.

Educate

Build awareness and skills across teams to promote responsible AI use.

Deploy Securely

Introduce enterprise-grade AI tools like Microsoft Copilot, which offer built-in compliance and control.

These steps align with Microsoft’s best practices and the lessons learned from large-scale deployments across government and industry.

How Quorum Can Help

Quorum can help you take control of your AI journey through the following services:

AI Usage Audit

Comprehensive audits to uncover where and how AI tools are being used across your organisation, identifying shadow AI risks and opportunities for secure deployment.

Secure Deployment Frameworks

We help you implement enterprise-grade AI solutions with built-in compliance, governance, and transparency, ensuring tools like Microsoft Copilot are deployed safely and effectively.

Policy and Governance Design

Quorum works with your IT and compliance teams to establish robust AI usage policies, aligned with regulatory requirements and industry best practices.

Training and Awareness

We can deliver tailored training programmes to upskill your workforce, promote responsible AI use, and build a culture of digital trust.

Ongoing Support and Optimisation

AI adoption isn’t a one-off project. Quorum provides continuous support to monitor usage, refine policies, and optimise performance as your needs evolve.

Want to Chat?

Whether you’re just beginning your AI journey or looking to scale responsibly, Quorum can help you turn shadow AI from a risk into a strategic advantage.

AWARDS & RECOGNITION

FOLLOW US

CONTACT INFO

Quorum

18 Greenside Lane, Edinburgh

EH1 3AH, UK

Phone: +44 131 652 3954

Email: marketing@quorum.co.uk